27 Oct

Just before Halloween 2021, Roblox engineers experienced a horror story: a service outage that also took down critical monitoring systems. It seemed like the issue was a hardware problem, but it wasn’t. Users were frustrated, and the clock was ticking. After three full days of downtime, service was finally restored on Halloween day.

While the incident itself was an IT nightmare, Roblox’s detailed technical post-mortem, published in January 2022, was an excellent way to bounce back. The post-mortem was a pleasant, late Christmas gift to users and sysadmins everywhere.

Thanks to transparency from Roblox, we have an outage case study to learn from. In this post, we’ll summarize the scope and root cause of the outage, explain what other ITOps teams can learn from it, and consider how SolarWinds® Scopify® can help you reduce the risk of extended downtime in your environment.

The scope of the outage

The outage began on October 28, 2021, and was resolved 73 hours later on October 31. The outage affected 50 million Roblox users. While we don’t have a specific dollar cost for the outage, it was a significant incident for Roblox and HashiCorp.

Roblox uses HashiCorp’s HashiStack to manage its global infrastructure. The stack consists of these components:

- Nomad: Schedules containers on specific hardware nodes and checks container health.

- Vault: A secrets management solution for securing sensitive data like credentials.

- Consul: An identity-based networking solution that provides service discovery, health checks, and session locking.

Roblox was one of HashiCorp’s marquee customers, so the outage affected HashiCorp’s reputation as well. Fortunately, HashiCorp engineers worked with Roblox to triage and troubleshoot the issue, demonstrating their commitment to customer success even under pressure.

The root cause of the outage

From a technical perspective, two issues together formed the root cause of the Roblox outage:

- Roblox enabled a new streaming feature on Consul at a time when database reads and writes were unusually high.

- BoltDB, the embedded database Consul uses to persist its write-ahead log, hit a pathological performance issue.

While the above two factors made up the root cause, several other factors contributed to the length of the outage. For example, because Roblox’s monitoring systems depended on the same systems that were down, visibility was limited.

Additionally, the issues were technically nuanced and took time to debug. Several initial attempts to restore service failed, and one of the recovery attempts (adding faster hardware) may have worsened things.

What can you learn from the outage?

Roblox did a great job making the details of the outage public, which gives other ITOps teams plenty to study. Four lessons stand out:

Visibility is vital.

In the Roblox outage, there was an unfortunate chicken-and-egg problem. Roblox had monitoring in place, but the monitoring tooling depended on the systems that were down. Therefore, they didn’t have deep visibility into the problem when they began troubleshooting.

For IT operations, there are two takeaways from this. First, make sure you’re monitoring all your critical infrastructure. Second, mitigate single points of failure that could knock your monitoring offline along with your production systems.
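
To make the second takeaway concrete, here is a minimal Python sketch of an out-of-band health probe, meant to run on a host that shares nothing with production. The `probe` function, the `ProbeResult` type, and the example URL in the note below are illustrative assumptions, not anything Roblox or Scopify uses.

```python
import time
import urllib.error
import urllib.request
from dataclasses import dataclass
from typing import Optional


@dataclass
class ProbeResult:
    """Outcome of a single external health check (hypothetical type)."""
    url: str
    ok: bool
    status: Optional[int]
    latency_s: float
    error: Optional[str] = None


def probe(url, timeout=5.0, opener=urllib.request.urlopen):
    """Fetch `url` once and report whether it answered successfully.

    `opener` defaults to urllib's urlopen; it is a parameter only so the
    function can be tested without touching the network.
    """
    start = time.monotonic()
    try:
        with opener(url, timeout=timeout) as resp:
            status = resp.status
        # Treat any 2xx or 3xx response as "up".
        return ProbeResult(url, 200 <= status < 400, status,
                           time.monotonic() - start)
    except urllib.error.HTTPError as exc:
        # The server answered, but with an error status (4xx or 5xx).
        return ProbeResult(url, False, exc.code,
                           time.monotonic() - start, str(exc))
    except OSError as exc:
        # DNS failure, refused connection, timeout, and similar errors.
        return ProbeResult(url, False, None,
                           time.monotonic() - start, str(exc))
```

Calling something like `probe("https://status.example.com/healthz")` from a scheduler outside your production environment, and alerting through a channel that also lives outside it, is one way to keep some visibility when internal telemetry goes dark.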

Limit single points of failure.

Roblox was susceptible to this outage because they ran their backend services on a single Consul cluster. They’ve learned from the outage and have since built infrastructure for an additional backend data center. Other ITOps teams should consider doing the same for their critical systems. If it’s mission-critical, aim for N+1 at a minimum. Of course, everything is ultimately a business decision. If you can’t justify the cost of N+1 (or better), ensure you’re comfortable with the downtime risk.
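
As a rough illustration of what an extra copy buys you, the Python sketch below estimates availability when any one of several copies can carry the full load. It assumes failures are independent, which is optimistic: the Roblox incident shows that shared dependencies can take several systems down together. The 99% figure in the note below is a made-up input, and both function names are hypothetical.

```python
def combined_availability(single: float, replicas: int) -> float:
    """Chance that at least one of `replicas` copies is up.

    Assumes each copy is up with probability `single` and that
    failures are independent (a strong simplifying assumption).
    """
    if not 0.0 <= single <= 1.0:
        raise ValueError("single must be between 0 and 1")
    if replicas < 1:
        raise ValueError("replicas must be at least 1")
    # All copies must fail at once for the service to be down.
    return 1.0 - (1.0 - single) ** replicas


def expected_downtime_hours(availability: float,
                            hours_per_year: float = 8760.0) -> float:
    """Convert an availability fraction into expected hours down per year."""
    return (1.0 - availability) * hours_per_year
```

With an illustrative 99% availability per copy, `expected_downtime_hours(0.99)` comes to about 87.6 hours a year, while `expected_downtime_hours(combined_availability(0.99, 2))` drops to under one hour. Real gains are smaller whenever the copies share a dependency, which is why the cost comparison is worth doing with your own numbers.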

Know your tradeoffs.

Roblox chose to avoid the public cloud for its core infrastructure. After the outage, some questioned if that was a wise choice, but Roblox has made a purposeful decision to stay the course. Public cloud infrastructure isn’t immune to outages, and Roblox considered the tradeoffs. They feel that maintaining control of their core infrastructure is more important than a public cloud’s benefits.

For other organizations, the calculations will be different. What matters is weighing the tradeoffs deliberately against your preferences, expertise, resources, and risk appetite.

Learn from your mistakes.

Continuous improvement leads to long-term success. After the outage, Roblox was transparent about what happened and implemented solutions (like the additional backend infrastructure) to prevent repeat failures. Mistakes happen, and no system is perfect. Teams that recognize this and adopt practices like blameless post-mortems tend to do better in the long run.

How Scopify can help

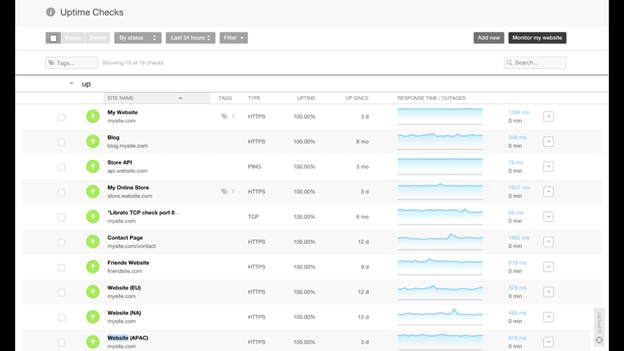

Scopify is a simple but robust website and web application monitoring platform that provides ITOps teams visibility into availability and performance. As a cloud platform, Scopify helps you avoid the chicken-and-egg problem Roblox experienced during its outage. Because Scopify is decoupled from your critical infrastructure, you can limit single points of failure that could take out your servers and monitoring tools at the same time.

Additionally, Scopify can monitor site availability from over 100 locations across the globe. That means your team can detect performance issues and outages that may only affect users in specific geographic areas. It can also make troubleshooting tough-to-diagnose issues easier because you can immediately isolate symptoms based on region. To see what SolarWinds Scopify can do for you, sign up for a free 30-day trial today.
